How to access object storage from CloudFerro Cloud using boto3

In this article, you will learn how to access object storage from CloudFerro Cloud using the Python library boto3.

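What we are going to cover

Terminology: containers vs. buckets

How to use the examples provided

Creating a bucket

Listing buckets

Checking when a bucket was created

Listing files in a bucket

Listing files from a particular path in a bucket

Uploading file to a bucket

Downloading file from a bucket

Removing file from a bucket

Removing a bucket

General troubleshooting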

Prerequisites

No. 1 Account

You need a CloudFerro Cloud hosting account with access to the Horizon interface: https://horizon.cloudferro.com/auth/login/?next=/.

No. 2 Generated EC2 credentials

You need to generate EC2 credentials. Learn more here: How to generate and manage EC2 credentials on CloudFerro Cloud

No. 3 A Linux or Windows computer or virtual machine

This article was written for Ubuntu 22.04 and Windows Server 2022. Other operating systems might work, but they are out of the scope of this article and might require you to adjust the commands supplied here.

You can follow this article on Linux or Windows virtual machines as well as on a local computer running one of those operating systems.

To build a new virtual machine hosted on CloudFerro Cloud, one of the following articles can help:

No. 4 Additional Python libraries

Here is how to install Python 3 and the boto3 library on Ubuntu and Windows.

Ubuntu 22.04: Using virtualenvwrapper and pip

In Python, you will typically use virtual environments, as described in How to install Python virtualenv or virtualenvwrapper on CloudFerro Cloud. If your environment is called managing-files, use the command workon to enter it and then install boto3:

```bash
workon managing-files
pip3 install boto3
```

Ubuntu 22.04: Using apt

If you do not need a virtual environment and want to make boto3 available globally on Ubuntu, use apt. The following command installs both Python 3 and the boto3 library:

```bash
sudo apt install python3 python3-boto3
```

Windows Server 2022

If you are using Windows, follow this article to learn how to install boto3: How to Install Boto3 in Windows on CloudFerro Cloud

Terminology: containers vs. buckets

There are two object storage interfaces:

Swift / Horizon – the Horizon dashboard uses the Swift API, where the top-level namespaces are called containers.

S3 / boto3 – the S3-compatible endpoint (used by boto3 and other S3 tools) uses buckets as the top-level namespaces.

Technically, containers and buckets play a similar conceptual role (they are both top-level namespaces in object storage), but they are not the same resource and are managed via different APIs.

A container created in the Horizon interface does not automatically appear as an S3 bucket for boto3, and an S3 bucket created via boto3 does not automatically show up as a container in Horizon.

In this article, we use the following terminology:

bucket when talking about S3 and boto3, and

container when referring to Swift objects visible in Horizon.

How to use the examples provided?

Each of the examples provided can serve as standalone code. You should be able to:

enter the code in your text editor,

define appropriate values for the variables,

save it as a file and

run it.

You can also use these examples as a starting point for your own code.

Note

In many examples we use boto3 or boto3_11_12_2025_09_31 as example bucket names.

If you do not already have buckets with these names, you have two options:

create a bucket with one of these names (see Creating a bucket), or

replace the example bucket names in the code with the name of any existing bucket shown by the Listing buckets example.

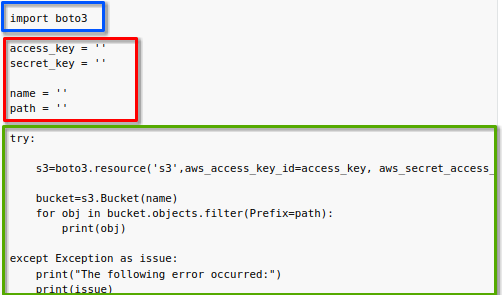

Each coding example has three parts:

- Blue rectangle: import necessary libraries

It is standard Python practice to import all the libraries the code needs at the top of the file. Here, you import the boto3 library first, followed by any other libraries the example requires.

- Red rectangle: define input parameters

A typical call to the boto3 library looks like this:

```python
s3 = boto3.resource(
    "s3",
    aws_access_key_id=access_key,
    aws_secret_access_key=secret_key,
    endpoint_url=endpoint_url,
    region_name=region_name,
)
```

For each call, several variables must be present:

aws_access_key_id – from Prerequisite No. 2

aws_secret_access_key – also from Prerequisite No. 2

endpoint_url – the S3 endpoint of your cloud, including the https:// scheme, for example https://s3.waw3-1.cloudferro.com

region_name – US

Important

Use the concrete value of endpoint_url for the cloud you are working with. The possible values are:

WAW4-1 https://s3.waw4-1.cloudferro.com

WAW3-1 https://s3.waw3-1.cloudferro.com

Other variables may be needed on a case-by-case basis:

bucket_name,

path

and possibly others. Be sure to enter the values for all those additional variables before running the examples.

- Green rectangle: the boto3 call

Most of the preparatory code stays the same from file to file; this last part is where the actual work happens. Sometimes it is a single line of code, sometimes several lines, often a loop.
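The red-rectangle part repeats the same four connection values in every script. A minimal sketch, assuming you prefer to keep the keys out of the source file, reads them from environment variables in one place. AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are names boto3 also recognizes on its own; S3_ENDPOINT_URL and S3_REGION_NAME are hypothetical names chosen for this example.

```python
import os


def s3_settings_from_env(default_endpoint="https://s3.waw3-1.cloudferro.com",
                         default_region="US"):
    """Collect boto3 connection parameters from environment variables.

    AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are standard boto3 names;
    S3_ENDPOINT_URL and S3_REGION_NAME are hypothetical names for this sketch.
    """
    try:
        access_key = os.environ["AWS_ACCESS_KEY_ID"]
        secret_key = os.environ["AWS_SECRET_ACCESS_KEY"]
    except KeyError as missing:
        raise RuntimeError(f"Environment variable {missing} is not set") from None
    return {
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
        # Fall back to the WAW3-1 endpoint and region used in this article
        "endpoint_url": os.environ.get("S3_ENDPOINT_URL", default_endpoint),
        "region_name": os.environ.get("S3_REGION_NAME", default_region),
    }
```

The returned dictionary can be unpacked straight into the call shown above: s3 = boto3.resource("s3", **s3_settings_from_env()).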

Running Python code

Once you have provided values for all the variables, save the file with a .py extension. One way to run Python scripts is from the command line: navigate to the folder that contains the script and execute the command below, replacing script.py with the name of your script.

On Ubuntu:

```bash
python3 script.py
```

On Windows:

```powershell
python script.py
```

Make sure that the name and/or location of the file is passed to the shell correctly – watch out for any spaces and other special characters.

Important

In these examples, you will not be asked for confirmation, even before potentially destructive operations. For example, if a script saves a file and a file with that name already exists at that location, it will likely be overwritten. Make sure this is what you want before running the script, or extend the code to check whether the file already exists.

In all the examples that follow, we assume that the corresponding buckets and objects already exist unless stated otherwise. Adding code that checks for that is out of the scope of this article.
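For the local-file check suggested in the Important note, a minimal sketch could wrap the download call. It assumes bucket is an s3.Bucket resource created as in the examples; download_if_absent is a hypothetical helper name, not part of boto3.

```python
import os


def download_if_absent(bucket, key, destination_path):
    """Download key from bucket only if destination_path does not exist yet.

    bucket is expected to be a boto3 s3.Bucket resource (any object with a
    download_file(key, filename) method works). Returns True if the file was
    downloaded, False if it was skipped.
    """
    if os.path.exists(destination_path):
        print(f"Skipping {key}: {destination_path} already exists")
        return False
    bucket.download_file(key, destination_path)
    return True
```

The check and the download are two separate steps, so another process could still create the file in between; for interactive use of these scripts that is usually acceptable.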

Creating a bucket

To create a new bucket, first choose a name and assign it to the bucket_name variable. On CloudFerro Cloud, use only letters, numbers and hyphens in bucket names.

In the rest of this article, we often use boto3 or boto3_11_12_2025_09_31 as example bucket names. You can either:

create a bucket with one of these names and use it in all examples, or

choose any other valid bucket name and use it consistently in all code samples.
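To catch naming mistakes before contacting the server, a minimal sketch can encode the letters, numbers and hyphens rule stated above. It is stricter than the regular expression boto3 itself checks (shown under Invalid characters used below), so, applied strictly, it also flags underscores such as those in boto3_11_12_2025_09_31. The function name is hypothetical.

```python
import re

# Letters (a-z, A-Z), digits and hyphens, at least one character
RECOMMENDED_BUCKET_NAME = re.compile(r"[A-Za-z0-9-]+")


def is_recommended_bucket_name(name):
    """Return True if name uses only letters, digits and hyphens."""
    return RECOMMENDED_BUCKET_NAME.fullmatch(name) is not None
```

You could call it right after defining bucket_name and stop the script if it returns False.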

boto3_create_bucket.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

bucket_name = "boto3"
endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

try:
    # Connect to the S3-compatible endpoint
    s3 = boto3.resource(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    # Create the bucket
    s3.Bucket(bucket_name).create()

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

Successful execution of this code should produce no output.

To test whether the bucket was created, you can, among other things, list buckets as described in section Listing buckets below.

Troubleshooting creating a bucket

Bucket already exists

If you receive the following output:

The following error occurred:

An error occurred (BucketAlreadyExists) when calling the CreateBucket operation: Unknown

it means that you cannot choose this name for a bucket because a bucket under this name already exists. Choose a different name and try again.

Invalid characters used

If you used wrong characters in the bucket name, you should receive an error similar to this:

Invalid bucket name "this bucket should not exist": Bucket name must match the regex "^[a-zA-Z0-9.\-_]{1,255}$" or be an ARN matching the regex "^arn:(aws).*:(s3|s3-object-lambda):[a-z\-0-9]*:[0-9]{12}:accesspoint[/:][a-zA-Z0-9\-.]{1,63}$|^arn:(aws).*:s3-outposts:[a-z\-0-9]+:[0-9]{12}:outpost[/:][a-zA-Z0-9\-]{1,63}[/:]accesspoint[/:][a-zA-Z0-9\-]{1,63}$"

To resolve, choose a different name. On CloudFerro Cloud, use only letters, numbers and hyphens.

Listing buckets

This code allows you to list buckets visible to your EC2 credentials.

boto3_list_buckets.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

try:
    # This example uses the low-level client instead of the resource
    s3 = boto3.client(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    # Print the raw list of bucket dictionaries
    print(s3.list_buckets()["Buckets"])

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

The output should be a list of dictionaries, each providing information about a particular bucket, starting with its name. If the buckets boto3, mapsrv and mapsrv-rasters exist, the output might be similar to this:

[{'Name': 'boto3', 'CreationDate': datetime.datetime(2025, 4, 30, 8, 52, 30, 658000, tzinfo=tzutc())},

{'Name': 'mapsrv', 'CreationDate': datetime.datetime(2025, 1, 7, 12, 43, 50, 522000, tzinfo=tzutc())},

{'Name': 'mapsrv-rasters', 'CreationDate': datetime.datetime(2025, 1, 7, 12, 44, 23, 39000, tzinfo=tzutc())}]

To simplify the output, use a for loop with a print statement to display only names of your buckets, one bucket per line:

boto3_list_one_by_one.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

try:
    s3 = boto3.client(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    # Print only the name of each bucket, one per line
    for bucket in s3.list_buckets()["Buckets"]:
        print(bucket["Name"])

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

An example output for this code can look like this:

boto3

mapsrv

mapsrv-rasters

If you have no buckets, the output should be empty.
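If you also want to see when each bucket was created, a minimal sketch can format the same list_buckets() response, sorted from oldest to newest. The function name and the output layout are illustrative choices, not part of boto3.

```python
def format_buckets(response):
    """Return one 'name  YYYY-MM-DD' line per bucket, oldest bucket first.

    response is the dictionary returned by s3.list_buckets() in the
    examples above; CreationDate values are datetime objects.
    """
    buckets = sorted(response.get("Buckets", []), key=lambda b: b["CreationDate"])
    return [f"{b['Name']}  {b['CreationDate']:%Y-%m-%d}" for b in buckets]
```

In the script above, replace the for loop with: for line in format_buckets(s3.list_buckets()): print(line).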

Checking when a bucket was created

Use this code to check the date on which a bucket was created. Enter the name of that bucket in the variable bucket_name.

boto3_check_date.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

bucket_name = "boto3"
endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

try:
    s3 = boto3.resource(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    # Prints None if the bucket is not visible to these credentials
    print(s3.Bucket(bucket_name).creation_date)

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

The output should contain the date the bucket was created, in the format of a Python datetime object:

2024-01-23 14:21:03.070000+00:00
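Because the value is a timezone-aware datetime, ordinary date arithmetic works on it. A minimal sketch, with a hypothetical helper name, computes how many full days ago the bucket was created and passes through the None value described under General troubleshooting.

```python
from datetime import datetime, timezone


def bucket_age_days(creation_date, now=None):
    """Return the number of full days since creation_date.

    creation_date is the timezone-aware datetime printed by the script above;
    None (bucket not visible to your credentials) is returned unchanged.
    """
    if creation_date is None:
        return None
    if now is None:
        now = datetime.now(timezone.utc)
    return (now - creation_date).days
```

In the script above, you could print bucket_age_days(s3.Bucket(bucket_name).creation_date) instead of the raw date.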

Listing files in a bucket

To list files you have in a bucket, provide the bucket name in the bucket_name variable.

boto3_list_files_in_bucket.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

bucket_name = "boto3"
endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

try:
    s3 = boto3.resource(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    bucket = s3.Bucket(bucket_name)

    # Iterate over every object; boto3 fetches further pages automatically
    for obj in bucket.objects.all():
        print(obj)

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

Your output should contain the list of your files. For a bucket called my-files, it could look like this:

s3.ObjectSummary(bucket_name='my-files', key='some-directory/')

s3.ObjectSummary(bucket_name='my-files', key='some-directory/another-file.txt')

s3.ObjectSummary(bucket_name='my-files', key='some-directory/first-file.txt')

s3.ObjectSummary(bucket_name='my-files', key='some-directory/some-other-directory/')

s3.ObjectSummary(bucket_name='my-files', key='some-directory/some-other-directory/some-other-file.txt')

s3.ObjectSummary(bucket_name='my-files', key='some-directory/some-other-directory/yet-another-file.txt')

s3.ObjectSummary(bucket_name='my-files', key='text-file.txt')

If there are no files in your bucket, the output should be empty.
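The resource collection in the script above pages through the listing on its own, and print(obj.key) prints just the key instead of the whole ObjectSummary. If you use the lower-level client instead (as in Listing buckets), list_objects_v2 returns at most 1000 keys per request, so a paginator is the usual way to collect them all. A minimal sketch, assuming client is created with boto3.client as shown earlier; iter_keys is a hypothetical name.

```python
def iter_keys(client, bucket_name, prefix=""):
    """Yield every object key in bucket_name that starts with prefix.

    client is expected to be a boto3 S3 client; the paginator follows the
    continuation tokens between pages for us.
    """
    paginator = client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name, Prefix=prefix):
        # "Contents" is missing from pages that contain no objects
        for obj in page.get("Contents", []):
            yield obj["Key"]
```

Usage: for key in iter_keys(s3, bucket_name): print(key).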

Troubleshooting listing files in a bucket

No access to bucket

If your key pair does not have access to the chosen bucket, you should get an error like this:

botocore.exceptions.ClientError: An error occurred (AccessDenied) when calling the ListObjects operation: Unknown

In this case, choose a different bucket or a different key pair if you have one which can access it.

Bucket does not exist

If a bucket you chose does not exist, the error might be:

botocore.errorfactory.NoSuchBucket: An error occurred (NoSuchBucket) when calling the ListObjects operation: Unknown

If you need a bucket which uses that name, try to create it as explained in the section Creating a bucket above.

Listing files from a particular path in a bucket

This example will list only objects from a certain path. There are two rules to follow for the path variable:

end it with a slash

do not start it with a slash.
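If the path comes from user input, a small helper can enforce both rules before the value is passed as Prefix. This is a minimal sketch with a hypothetical function name; an empty string is kept as is, because an empty prefix matches the whole bucket.

```python
def normalize_prefix(path):
    """Remove leading slashes and end the path with exactly one slash."""
    path = path.strip("/")
    return f"{path}/" if path else ""
```

For example, normalize_prefix("/some-directory") returns "some-directory/".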

As always, put the name of the bucket in the bucket_name variable.

boto3_list_files_from_path.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

bucket_name = "boto3"
endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

path = "some-directory/"

try:
    s3 = boto3.resource(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    bucket = s3.Bucket(bucket_name)

    # Only objects whose key starts with path are listed
    for obj in bucket.objects.filter(Prefix=path):
        print(obj)

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

The output has the same format as in the previous example, limited to objects whose keys start with path. If there are no files under the chosen path, the output will be empty.
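The prefix filter above returns everything below path, including files in deeper "subdirectories". To get a view closer to a directory listing, S3 accepts a Delimiter parameter: with Delimiter="/", deeper keys are grouped into CommonPrefixes instead of being returned one by one. A minimal sketch, assuming client is a boto3 S3 client; list_level is a hypothetical name.

```python
def list_level(client, bucket_name, prefix=""):
    """Return (subfolders, files) directly under prefix.

    With Delimiter="/", keys below the next slash are reported once as a
    common prefix (a "subfolder") rather than individually.
    """
    folders, files = [], []
    paginator = client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket_name, Prefix=prefix, Delimiter="/"):
        folders.extend(p["Prefix"] for p in page.get("CommonPrefixes", []))
        files.extend(o["Key"] for o in page.get("Contents", []))
    return folders, files
```

For the my-files listing shown earlier and prefix some-directory/, the folders would contain some-directory/some-other-directory/, while the files would include some-directory/first-file.txt and some-directory/another-file.txt.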

Uploading file to a bucket

To upload a file to the bucket, set the following variables:

| Variable name | What it should contain |
|---|---|
| bucket_name | The name of the bucket to which you want to upload your file. |
| source_file | The location of the file you wish to upload in your local file system. |
| destination_file | The path in your bucket under which you want to upload the file. Use only letters, digits, hyphens, underscores, dots and slashes. |

Two caveats for the variable destination_file:

Finish it with the name of the file you are uploading, and

Do not start or finish it with a slash.

The bucket must already exist and be visible to your EC2 credentials. You can verify this by listing buckets as shown earlier.

This is the code:

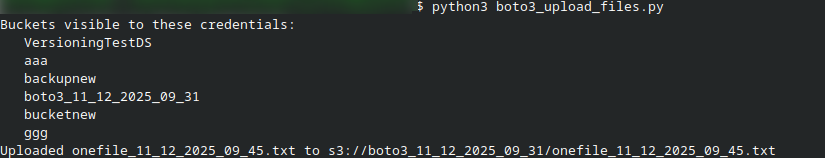

boto3_upload_files.py

```python
#!/usr/bin/env python3
import boto3

access_key = ""
secret_key = ""

bucket_name = "boto3_11_12_2025_09_31"  # replace with your bucket name
endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

source_file = "onefile_11_12_2025_09_45.txt"
destination_file = "onefile_11_12_2025_09_45.txt"  # do not start with a slash

try:
    s3 = boto3.client(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    # Upload the file
    s3.upload_file(source_file, bucket_name, destination_file)
    print(f"Uploaded {source_file} to s3://{bucket_name}/{destination_file}")

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

This script uploads the file onefile_11_12_2025_09_45.txt from the current working directory to the bucket named boto3_11_12_2025_09_31; the uploaded file will also be called onefile_11_12_2025_09_45.txt. This is what the result could look like in the terminal:

Fig. 50 Upload file to S3 bucket using boto3

The code can easily be adapted to other scenarios. For example, you can:

wrap the upload logic in a function and call it from other scripts,

add retries or more detailed error handling,

upload many files in a loop, or

log success or failure to a file.

All of this is out of the scope of this article, but can be built on top of the same pattern.
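As an illustration of the "upload many files in a loop" idea, a minimal sketch can walk a local folder and upload every file, using its relative path as the object key. It assumes client is created with boto3.client as in the upload script; upload_directory and its prefix parameter are hypothetical.

```python
import os


def upload_directory(client, local_dir, bucket_name, prefix=""):
    """Upload every file under local_dir, keeping relative paths as keys.

    client is a boto3 S3 client; prefix is prepended to each key and should
    follow the path rules above (no leading slash, trailing slash).
    Returns the list of keys that were uploaded.
    """
    uploaded = []
    for root, _dirs, files in os.walk(local_dir):
        for name in sorted(files):
            local_path = os.path.join(root, name)
            # Object keys always use "/", even on Windows
            relative = os.path.relpath(local_path, local_dir).replace(os.sep, "/")
            key = prefix + relative
            client.upload_file(local_path, bucket_name, key)
            uploaded.append(key)
    return uploaded
```

As written, the first failed upload raises an exception and stops the loop; wrapping the upload_file call in its own try block would let the remaining files continue.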

Troubleshooting uploading file to a bucket

File you want to upload does not exist

If you specified a non-existent file in the variable source_file, you should get an error similar to this:

The following error occurred:

[Errno 2] No such file or directory: 'here/wrong-file.txt'

To resolve, specify the correct file and try again.

Bucket does not exist or is not accessible

If the bucket does not exist or your key pair cannot access it, boto3 will typically return an AccessDenied or NoSuchBucket error. In that case, either:

choose a different bucket that appears in the bucket list, or

create a new bucket and repeat the upload.

Downloading file from a bucket

To save a file from a bucket to your local hard drive, fill in the values of the following variables and run the code below:

| Variable name | What it should contain |
|---|---|
| bucket_name | The name of the bucket from which you wish to download your file. |
| source_key | The path in the bucket from which you wish to download your file. |
| destination_path | The path in your local file system under which you wish to save your file. |

Do not start or finish the variable source_key with a slash.

boto3_download_file.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

bucket_name = "boto3_11_12_2025_09_31"
endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

source_key = "onefile_11_12_2025_09_45.txt"
destination_path = "onefile2.txt"

try:
    s3 = boto3.resource(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    bucket = s3.Bucket(bucket_name)

    # An existing local file at destination_path will be overwritten
    bucket.download_file(source_key, destination_path)

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

Successful execution of this code should produce no output.
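When downloading objects whose keys contain "folders", such as some-directory/some-other-directory/some-other-file.txt, you may want to recreate that structure locally. A minimal sketch, assuming bucket is the s3.Bucket resource from the script above; download_to_directory is a hypothetical name. The local folders are created first so that the target location exists before the download starts.

```python
import os


def download_to_directory(bucket, key, target_dir):
    """Save object key below target_dir, recreating its folder structure.

    bucket is a boto3 s3.Bucket resource. Returns the local path written to.
    """
    if key.endswith("/"):
        # Keys such as "some-directory/" are folder markers, not files
        raise ValueError(f"{key!r} looks like a folder, not a file")
    local_path = os.path.join(target_dir, *key.split("/"))
    # Create the local folders before downloading into them
    os.makedirs(os.path.dirname(local_path) or ".", exist_ok=True)
    bucket.download_file(key, local_path)
    return local_path
```

This sketch trusts the keys; if the bucket may contain keys with ".." segments, check for them before building local_path so files cannot land outside target_dir.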

Troubleshooting downloading file from a bucket

File does not exist in bucket

If a file you chose does not exist in the bucket, the following error should appear:

The following error occurred:

An error occurred (404) when calling the HeadObject operation: Not Found

To resolve, make sure that source_key contains the full key of an existing object; you can check it by listing the files in the bucket.

Removing file from a bucket

To remove a file from your bucket, supply the name of the bucket in the variable bucket_name and the full path of the file within the bucket in the variable path.

In S3 terminology, this full path is called the object key.

boto3_remove_file_from_bucket.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

bucket_name = "boto3_11_12_2025_09_31"
endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

path = "onefile_11_12_2025_09_45.txt"

try:
    s3 = boto3.resource(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    # Delete a single object by its key
    s3.Object(bucket_name, path).delete()

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

Successful execution of this code should produce no output.
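The script above sends one request per object. To remove many objects, the client's delete_objects operation accepts up to 1000 keys per request. A minimal sketch, assuming client is created with boto3.client; delete_keys and batch_size are hypothetical names.

```python
def delete_keys(client, bucket_name, keys, batch_size=1000):
    """Delete many objects with as few requests as possible.

    Uses client.delete_objects, which accepts up to 1000 keys per request.
    Returns the keys the server reported as failed.
    """
    failed = []
    keys = list(keys)
    for start in range(0, len(keys), batch_size):
        batch = keys[start:start + batch_size]
        response = client.delete_objects(
            Bucket=bucket_name,
            Delete={"Objects": [{"Key": k} for k in batch], "Quiet": True},
        )
        # In quiet mode only failures are listed, under "Errors"
        failed.extend(err["Key"] for err in response.get("Errors", []))
    return failed
```

Combined with a key listing, for example delete_keys(s3, bucket_name, [k for k in keys if k.startswith("old/")]), this removes a whole "folder" in a few requests.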

Removing a bucket

To remove a bucket, first remove all objects from it. Once it is empty, set the variable bucket_name to the name of the bucket you want to remove and run the code below:

boto3_remove_bucket.py

```python
import boto3

# EC2 credentials from Prerequisite No. 2
access_key = ""
secret_key = ""

bucket_name = "boto3_11_12_2025_09_31"
endpoint_url = "https://s3.waw3-1.cloudferro.com"
region_name = "US"

try:
    s3 = boto3.resource(
        "s3",
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
        endpoint_url=endpoint_url,
        region_name=region_name,
    )

    # Fails with BucketNotEmpty unless the bucket is empty
    s3.Bucket(bucket_name).delete()

except Exception as issue:
    print("The following error occurred:")
    print(issue)
```

Successful execution of this code should produce no output.

Troubleshooting removing a bucket

Bucket does not exist or is unavailable to your key pair

If the bucket does not exist or is unavailable for your key pair, you should get the following output:

The following error occurred:

An error occurred (NoSuchBucket) when calling the DeleteBucket operation: Unknown

Bucket is not empty

If the bucket is not empty, it cannot be deleted. The message will be:

The following error occurred:

An error occurred (BucketNotEmpty) when calling the DeleteBucket operation: Unknown

To resolve, remove all objects from the bucket and try again.
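Emptying and removing the bucket can also be combined. The bucket resource's objects.all().delete() is a batch action that removes the listed objects with DeleteObjects requests. Because the examples never ask for confirmation, this minimal sketch makes the caller repeat the bucket name first; empty_and_delete_bucket and confirm_name are hypothetical. Everything in the bucket is removed permanently.

```python
def empty_and_delete_bucket(bucket, confirm_name):
    """Delete every object in bucket, then the bucket itself.

    bucket is a boto3 s3.Bucket resource; confirm_name must equal its name.
    """
    if confirm_name != bucket.name:
        raise ValueError(f"Refusing to delete {bucket.name!r}: names do not match")
    # Batch action: removes all objects currently listed in the bucket
    bucket.objects.all().delete()
    bucket.delete()
```

Usage: empty_and_delete_bucket(s3.Bucket(bucket_name), input("Type the bucket name to confirm: ")).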

General troubleshooting

No connection to the endpoint

If you do not have a connection to the endpoint (for example, because you lost your Internet connection), you should get output similar to this:

The following error occurred:

Could not connect to the endpoint URL: "https://s3.waw3-1.cloudferro.com/"

If that is the case, make sure that you are connected to the Internet. If you are sure that you are connected to the Internet and no downtime for CloudFerro Cloud object storage was announced, contact CloudFerro Cloud customer support: Helpdesk and Support

Wrong credentials

If the access and/or secret keys are wrong, a message like this will appear:

The following error occurred:

An error occurred (InvalidAccessKeyId) when calling the ListBuckets operation: Unknown

Refer to Prerequisite No. 2 for how to obtain valid credentials.

Bucket does not exist or is unavailable to your key pair

If the bucket you chose does not exist or is unavailable to your key pair, the output depends on the script you are running. For example, the script from Checking when a bucket was created prints only:

None

while the script from Removing file from a bucket prints:

The following error occurred:

An error occurred (NoSuchBucket) when calling the DeleteObject operation: Unknown
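The troubleshooting hints in this article can be collected in one place and printed from the except branch of any example. A minimal sketch: botocore's ClientError keeps the parsed server reply in its response attribute, whereas local errors such as a missing file have no such attribute. The names ADVICE, error_code and advice_for are hypothetical.

```python
# Hints taken from the troubleshooting sections of this article
ADVICE = {
    "BucketAlreadyExists": "Choose a different bucket name.",
    "AccessDenied": "Use a bucket your key pair can access, or another key pair.",
    "NoSuchBucket": "Check the bucket name, or create the bucket first.",
    "BucketNotEmpty": "Remove all objects from the bucket and try again.",
    "InvalidAccessKeyId": "Check your EC2 credentials (Prerequisite No. 2).",
    "404": "Check that the object key exists in the bucket.",
}


def error_code(issue):
    """Return the S3 error code carried by an exception, or None."""
    response = getattr(issue, "response", None) or {}
    return response.get("Error", {}).get("Code")


def advice_for(issue):
    """Map an exception raised by the examples to a short hint."""
    return ADVICE.get(error_code(issue), "See the General troubleshooting section.")
```

In any example, add print(advice_for(issue)) after print(issue) in the except branch.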

What To Do Next

Another tool for accessing object storage in the cloud is s3cmd: How to access object storage from CloudFerro Cloud using s3cmd.